Revealing and analyzing text bias in Large Audio-Language Models when audio and text inputs disagree.

Nov 1, 2025

A comprehensive taxonomy and effective detection methods for glitch tokens in Large Language Models.

Jul 15, 2024

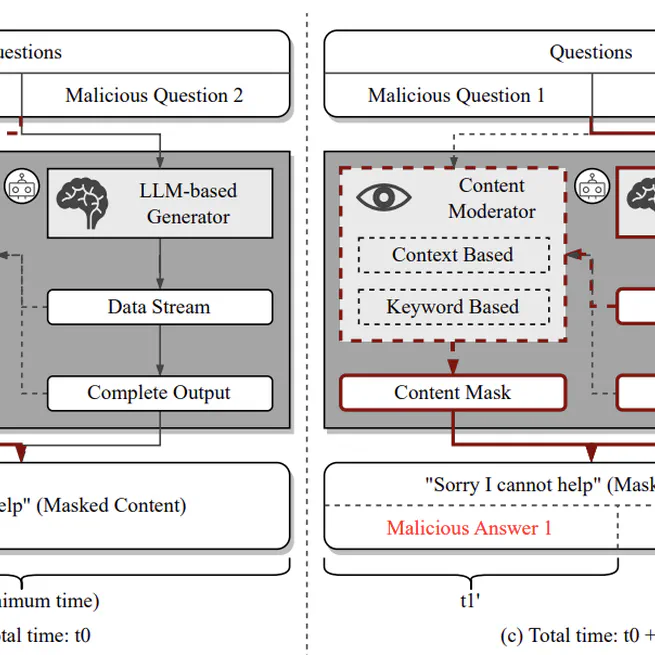

A comprehensive framework for automated jailbreaking of Large Language Model chatbots, featuring novel attack methodologies and a systematic analysis of defense mechanisms.

Feb 26, 2024

A comprehensive analysis of jailbreak attack and defense techniques for Large Language Models.

Feb 20, 2024

A novel approach to detecting misuse of copyrighted content in Large Language Model training data.

Jan 1, 2024

A comprehensive study of prompt injection attacks against LLM-integrated applications.

Jun 9, 2023

A comprehensive empirical study of jailbreaking techniques against ChatGPT through prompt engineering.

May 23, 2023

A comprehensive analysis of offensive AI threats to organizations and strategies for defense.

Jan 1, 2023