A Hitchhiker's Guide to Jailbreaking ChatGPT via Prompt Engineering

A comprehensive guide to jailbreaking ChatGPT via prompt engineering techniques.

Apr 20, 2024

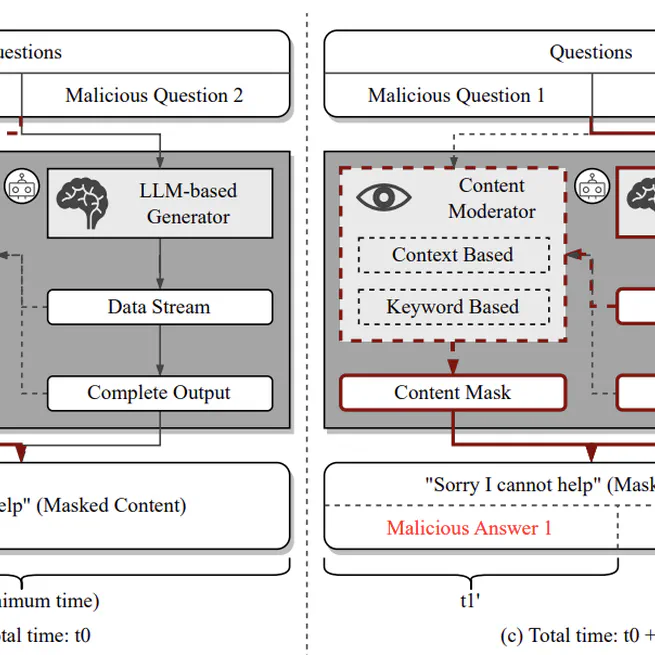

MASTERKEY: Automated Jailbreaking of Large Language Model Chatbots

A comprehensive framework for automated jailbreaking of Large Language Model chatbots, featuring novel attack methodologies and systematic analysis of defense mechanisms.

Feb 26, 2024

A Comprehensive Study of Jailbreak Attack versus Defense for Large Language Models

A comprehensive analysis of jailbreak attack and defense techniques for Large Language Models.

Feb 20, 2024

PANDORA: Jailbreak GPTs by Retrieval Augmented Generation Poisoning

A novel attack framework that exploits retrieval-augmented generation (RAG) to jailbreak LLMs by poisoning the retrieval database. Distinguished Paper Award winner.

Feb 1, 2024

Jailbreaking ChatGPT via Prompt Engineering: An Empirical Study

A comprehensive empirical study of jailbreaking techniques against ChatGPT through prompt engineering.

May 23, 2023